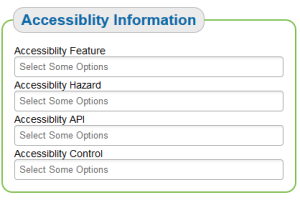

As the schema.org proposal receives more attention, there have been numerous questions about how useful mediaFeature and accessMode are, both for searching for accessible content and for judging whether that content is usable. These questions are best answered with a demonstration. WGBH built a repository of learning resources that are tagged with information representing LRMI and Accessibility Metadata (the database was built with the original AccessForAll tags). Madeleine Rothberg, Project Director for the WGBH National Center for Accessible Media, recorded a short video (5:19) that shows the accessibility metadata in use. The video is captioned, and a transcript appears at the end of this page. Its major points are:

- 00:30 An example search, where the accessibility properties (mediaFeature) are displayed.

- 01:20 A new scenario: the user can’t hear, either because of deafness or because the computers in the lab have no speakers. Accessibility preferences are set to match.

- 01:57 Now that the profile is known, the search results show whether the content is accessible to that user or not.

- 03:10 Shows that an animation with no audio is flagged as accessible to this user even though it doesn’t have captions.

- 04:15 Search filters let you search on specific mediaFeatures.

I think you’ll agree that this information makes accessible content easier to find.
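The matching shown at 01:57 and 03:10 can be sketched in a few lines. This is only an illustration, not the Teachers’ Domain implementation: the function name is hypothetical, and the assumption that `captions` and `transcript` are the mediaFeature values that adapt auditory content is mine.

```python
from typing import Iterable, Optional

# Assumption: these mediaFeature values adapt auditory content for a user
# who cannot hear. The exact vocabulary tokens are illustrative.
AUDITORY_ADAPTATIONS = {"captions", "transcript"}


def accessible_without_hearing(
    access_modes: Iterable[str], media_features: Iterable[str]
) -> Optional[bool]:
    """Return True (accessible), False (inaccessible), or None (no metadata)."""
    modes = set(access_modes)
    if not modes:
        return None  # untagged resource: nothing can be claimed either way
    if "auditory" not in modes:
        return True  # no audio at all, so nothing needs adapting
    # Audio is present: accessible only if some adaptation is offered.
    return bool(AUDITORY_ADAPTATIONS & set(media_features))
```

Under these assumptions, a captioned video and a silent animation both come back as accessible, an audio file without captions or a transcript comes back as inaccessible, and an untagged resource gets no label.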

Their plans for future implementation would allow similar searches without saved preferences, by offering search filters that replicate the kind of personalized search the preferences allow: for example, by finding resources that either have no audio content or have all auditory content adapted to other accessModes. Only resources with no audio, or with their auditory content adapted, would be displayed.
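A preference-free filter of this kind might look like the sketch below. The catalog records, the `ADAPTATIONS` table, and the function name are all hypothetical; the "DNA Evidence" entry is modeled on how the transcript describes it (an audio file with no captions or transcript).

```python
# Hypothetical catalog records; keys follow the proposed schema.org property names.
CATALOG = [
    {"name": "The Structure of DNA",
     "accessMode": ["visual", "auditory"], "mediaFeature": ["captions"]},
    {"name": "DNA Animation", "accessMode": ["visual"], "mediaFeature": []},
    {"name": "DNA Evidence", "accessMode": ["auditory"], "mediaFeature": []},
]

# Assumption: which mediaFeature values adapt content away from a given mode.
ADAPTATIONS = {"auditory": {"captions", "transcript"}}


def usable_without(mode, resources):
    """Names of resources that avoid `mode` or offer an adaptation for it."""
    kept = []
    for resource in resources:
        modes = set(resource.get("accessMode", []))
        features = set(resource.get("mediaFeature", []))
        if not modes:
            continue  # untagged: the filter cannot vouch for it
        if mode not in modes or features & ADAPTATIONS.get(mode, set()):
            kept.append(resource["name"])
    return kept


print(usable_without("auditory", CATALOG))
```

Keeping the mode as a parameter means the same filter could serve other needs (for example, content usable without vision) once an adaptation table for that mode is agreed on.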

The magic that makes this happen is the accessibility metadata. I’ve included code snippets below for what these tags would be in the data if tagged today.

This demo was done with encodings that were predecessors to LRMI and Accessibility Metadata, so I have translated the syntax and names to our proposed schema.org names.

For http://www.teachersdomain.org/resource/biot11.sci.life.gen.structureofdna

<pre>
<div itemscope="" itemtype="http://schema.org/Movie">
<meta itemprop="accessMode" content="visual" />
<meta itemprop="accessMode" content="auditory" />
<meta itemprop="mediaFeature" content="captions" />
<span itemprop="name">The Structure of DNA</span>
<meta itemprop="about" content="DNA" />
<meta itemprop="keywords" content="National K-12 Subject" />
<meta itemprop="keywords" content="Science" />
<meta itemprop="keywords" content="Life Science" />
<meta itemprop="keywords" content="Genetics and Heredity" />
<meta itemprop="keywords" content="Molecular Mechanisms of DNA" />
<meta itemprop="learningResourceType" content="Movie" />
<meta itemprop="inLanguage" content="en-us" />
<meta itemprop="typicalAgeRange" content="14-18+" />
</div>
</pre>

For the silent video http://www.teachersdomain.org/resource/bb09.res.vid.dna/, the accessMode is now only visual, and captions is no longer listed as an adaptation (mediaFeature), because there is no auditory accessMode to adapt. The third and fourth lines of the sample tags disappear.

<pre>
<div itemscope="" itemtype="http://schema.org/Movie">
<meta itemprop="accessMode" content="visual" />
<span itemprop="name">DNA Animation</span>
<meta itemprop="about" content="DNA" />
<meta itemprop="keywords" content="National K-12 Subject" />
<meta itemprop="keywords" content="Science" />
<meta itemprop="keywords" content="Life Science" />
<meta itemprop="keywords" content="Genetics and Heredity" />
<meta itemprop="keywords" content="Molecular Mechanisms of DNA" />
<meta itemprop="learningResourceType" content="Movie" />
<meta itemprop="inLanguage" content="en-us" />
<meta itemprop="typicalAgeRange" content="14-18+" />
</div>
</pre>
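To show how a consumer might read tags like these, here is a minimal sketch that uses Python's standard `html.parser` module. The `ItemPropCollector` class and `extract_props` helper are hypothetical names, and the sketch only collects values carried in `<meta content="...">` tags; it is not a full microdata parser and ignores text-valued properties such as the `<span itemprop="name">` element.

```python
from collections import defaultdict
from html.parser import HTMLParser

# Shortened copy of the first sample above, used only for illustration.
SAMPLE = """
<div itemscope="" itemtype="http://schema.org/Movie">
<meta itemprop="accessMode" content="visual" />
<meta itemprop="accessMode" content="auditory" />
<meta itemprop="mediaFeature" content="captions" />
<span itemprop="name">The Structure of DNA</span>
</div>
"""


class ItemPropCollector(HTMLParser):
    """Collect itemprop values carried by <meta content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.props = defaultdict(list)

    def handle_starttag(self, tag, attrs):
        # Self-closing tags such as <meta ... /> also reach this method
        # through HTMLParser's default handle_startendtag.
        attributes = dict(attrs)
        content = attributes.get("content")
        if tag == "meta" and "itemprop" in attributes and content is not None:
            self.props[attributes["itemprop"]].append(content)


def extract_props(html):
    """Return a dict mapping each itemprop name to its list of values."""
    collector = ItemPropCollector()
    collector.feed(html)
    collector.close()
    return dict(collector.props)


props = extract_props(SAMPLE)
print(props.get("accessMode"), props.get("mediaFeature"))
```

Repeated properties such as accessMode are kept as lists, because a single resource can legitimately carry several values for the same property.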

The transcript of the above video can be found below:

This is a demonstration of accessibility features in Teachers’ Domain. Teachers’ Domain is a digital media library for teachers and students. It’s being transitioned to a new site, called PBS Learning Media, where the accessibility features may be a little bit different. So I wanted to show you how these accessibility features work right now in Teachers’ Domain.

If I do a search in Teachers’ Domain on a general topic, like DNA, I get back hundreds of results. Some of them have accessibility information available, and some do not. So, for example, here is a video that offers captions, so it’s labeled with having the accessibility feature of captions. And if I play that video, I’d be able to turn the captions on. Here are a lot of other resources that don’t have any accessibility information. Here’s one. It’s a document that includes the accessibility feature long description. So that means that images that are part of the document have been described in text thoroughly enough that a person who can’t see the images can make use of the document.

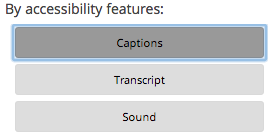

So this amount of information is really useful. If you’re scanning a bunch of results, you can look and see which accessibility features are offered for which videos. But it doesn’t give you the full story. For example, if you are a person who can’t hear, or you’re using video in a classroom without speakers, you might think that this video, called “Insect DNA Lab,” wouldn’t be useful to you because it doesn’t list that it contains captions. But what you can’t tell from this piece of information is that that video doesn’t have any audio at all, so it’s perfectly suited for use without audio, because there isn’t any. So in order to extract that kind of detail about how well different resources meet the needs of a particular teacher or students, we can set the features in the profile. So we go to My Profile, and scroll to bottom where there are Accessibility Settings. And right now none of the accessibility settings are set, and that is why we aren’t getting custom information. I set my accessibility preferences to indicate that I need captions for video and audio, and when transcripts are available, that I’d like those too. Now I’ve got a set of accessibility preferences that match the needs of a person who can’t hear, or can’t hear well, and might also match the needs of a school where there are not speakers on the computers in the computer lab.

So now if I repeat that same search for material on DNA, my resources that don’t have any Accessibility Metadata look just the same. But the resources that have Accessibility Metadata start to pop up with more information. So this resource, “DNA Evidence” is an audio file, and there are no captions or transcripts available. It’s labeled as inaccessible to me. This video, which we had already noted has captions, now has a green check mark and says that it’s accessible. Similarly, this interactive activity that doesn’t have any audio in it is fine. This DNA animation video that doesn’t have any audio is listed as accessible to me. So now with a combination of the metadata on the resources and my own personal preferences recorded in my profile, I can actually get much better information as I look through the set of search results about which resources will suit me best. And when it works properly in the player, you can also use this feature to serve up the video automatically with the right features turned on. So for example, if you look at this video about the structure of DNA, we know that it has captions and when we view the video those captions should come on automatically.

Another way that you can use the information in Teachers’ Domain is with the search filters. Here there’s a set of accessibility features that are listed for my search results. So in all of the search results about DNA, for which there are 202, I can see quickly here that five of those offer audio description, which is an additional audio track for use by people who are blind or visually impaired who can’t see the video, but can learn a lot from the audio. There are 76 resources that have captions, 13 with transcripts, and 8 that offer long description of images, so that those static images can be made accessible to people who can’t see them. So the faceted search is another way to quickly find resources if you know you are looking for a particular kind of resource, like one that has captions, or one that has descriptions. But the additional benefit of the accessibility checkmarks is that they alert you to resources that are accessible to you whether because they have a feature you especially need, like captions, or because they don’t have any audio in the first place and they don’t pose a barrier to your use.